Some commonly used statistical concepts are hard to master not only for most patients, but for many professionals as well, especially when it comes to cancer screening.

A common assumption in discussions of informed decision making in medicine is that many patients find it hard to grasp statistical concepts, and the meaning of evidence, because of inadequate health literacy. But that assumption might be wrong, or at least simplistic, according to a growing body of evidence published in recent years: statistical concepts, and the meaning of evidence, might also be beyond the comprehension of many health professionals, with repercussions that are particularly evident when it comes to cancer screening.

“Many physicians do not know or understand the medical evidence behind screening tests, do not adequately counsel (asymptomatic) people on screening, and make recommendations that conflict with existing guidelines on informed choice” writes Odette Wegwarth, from the Harding Center for Risk Literacy in Berlin, in a recent article published in PLoS ONE. That research (PLoS ONE 2017 12(8): e0183024) evaluated how providing people with simple evidence-based information affected their willingness to follow their doctor’s advice, which is often unscientific.

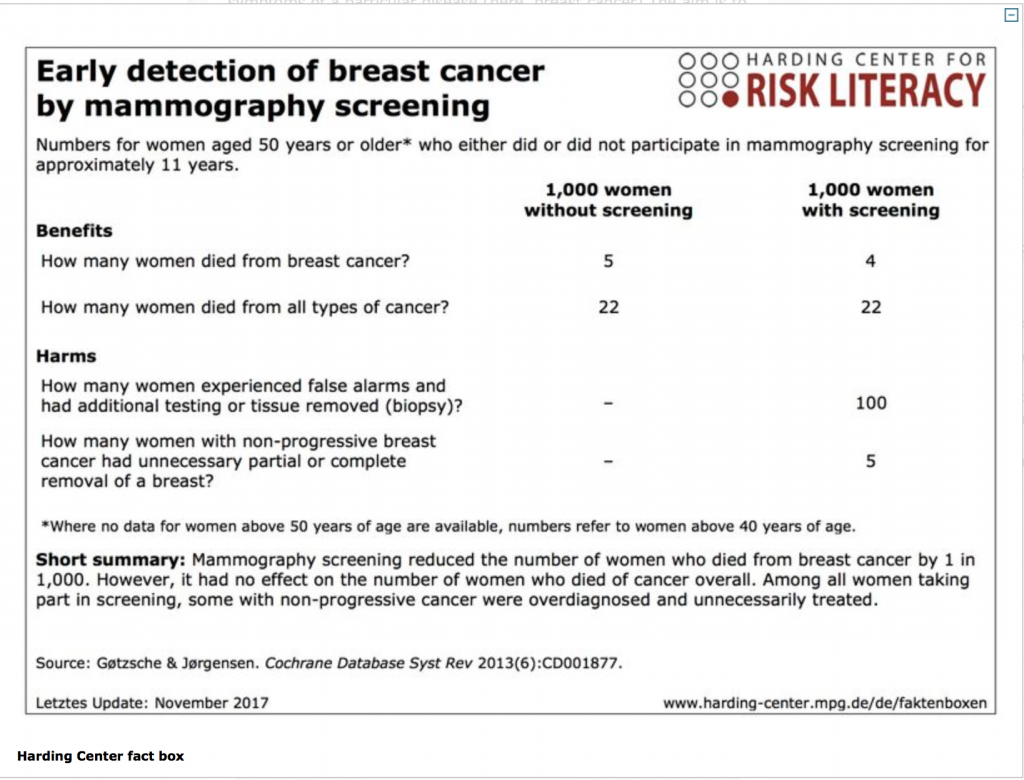

“Around 80% of doctors do not understand statistics even in their field, not even basic concepts like the difference between absolute risk and relative risk” explains Gerd Gigerenzer, who directs the Harding Center and co-authored that article. “The basic problem is that statistics is taught in such a way that students often don’t see the connection with medicine. Then as doctors they are subject to the combined pressure of defensive medicine and of monetary incentives”. Gigerenzer has published several books on risk perception and decision making (including Better Doctors, Better Patients, Better Decisions for MIT Press in 2011), and many research articles and editorials discussing both technical aspects of statistics and more subtle distortions of the available data. “Communication about cancer screening is dodgy: benefits are overstated and harms downplayed. Several techniques of persuasion are used. These include using the term ‘prevention’ instead of ‘early detection’, thereby wrongly suggesting that screening reduces the odds of getting cancer. Reductions in relative, rather than absolute, risk are reported, which wrongly indicate that benefits are large. And reporting increases in 5 year survival rates wrongly implies that these correlate with falls in mortality” he wrote in 2016 in an editorial in the British Medical Journal (BMJ 2016;352:h6967). To counter the imbalance, Gigerenzer proposed a “fact box”, which has since been updated and is available online, juxtaposing all the pros and cons of mammography, described in simple language and expressed in natural frequencies (see box).
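The gap between relative and absolute risk that Gigerenzer describes can be made concrete with a few lines of Python. The sketch below is only an illustration: the figures (5 deaths per 1,000 people without screening, 4 per 1,000 with screening) are hypothetical numbers chosen for the example, and the function name `risk_reduction` is ours, not taken from any of the cited papers.

```python
from fractions import Fraction


def risk_reduction(deaths_control, deaths_screened, group_size):
    """Return (absolute, relative) risk reduction for two equally sized groups."""
    risk_control = Fraction(deaths_control, group_size)
    risk_screened = Fraction(deaths_screened, group_size)
    absolute = risk_control - risk_screened   # difference between the two risks
    relative = absolute / risk_control        # same difference, as a share of the baseline risk
    return absolute, relative


# Hypothetical figures, for illustration only: 5 vs 4 deaths per 1,000 people.
absolute, relative = risk_reduction(deaths_control=5, deaths_screened=4, group_size=1000)

print(f"Relative risk reduction: {float(relative):.0%}")
print(f"Absolute risk reduction: {float(absolute):.1%} "
      f"({absolute.numerator} in {absolute.denominator})")
```

With these made-up numbers the same result can be reported either as a 20% reduction or as 1 fewer death in 1,000 people, which is why the choice of format matters so much.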

What are natural frequencies?

“Natural frequencies are frequencies that correspond to the way humans encountered information before the invention of books and probability theory. Unlike probabilities and relative frequencies, they are ‘raw’ observations that have not been normalized with respect to the base rates of the event in question. For instance, a physician has observed 100 persons, 10 of whom show a new disease and the others do not” reads the glossary of technical terms published by Gigerenzer’s Harding Center for Risk Literacy, along with fact boxes, with explanatory texts, on screening for ovarian, prostate and colon cancers. “Of these 10, 8 show a symptom, whereas 4 of the 90 without disease also show the symptom. Breaking these 100 cases down into four numbers (disease and symptom: 8; disease and no symptom: 2; no disease and symptom: 4; no disease and no symptom: 86) results in four natural frequencies 8, 2, 4, and 86. Natural frequencies facilitate Bayesian inferences. For instance, if observing a new person with the symptom, the physician can easily see that the chances that this patient also has the disease is 8/(8 + 4), that is, two-thirds. If the physician’s observations, however, are transformed into conditional probabilities or relative frequencies (e.g., by dividing the natural frequency 4 by the base rate 90, resulting in .044 or 4.4 percent), then the computation of this probability becomes more difficult and requires Bayes’s rule for probabilities. Natural frequencies help people to make sound conclusions, whereas conditional probabilities tend to cloud minds”.
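The glossary’s example can be checked by following both routes in code. The short Python sketch below uses only the four counts quoted above (8, 2, 4 and 86); the variable names are ours. It first reads the answer directly off the natural frequencies, then converts the counts into conditional probabilities and applies Bayes’s rule.

```python
from fractions import Fraction

# The four natural frequencies from the glossary example (100 people observed).
disease_symptom = 8
disease_no_symptom = 2
healthy_symptom = 4
healthy_no_symptom = 86

# Route 1: natural frequencies. Among everyone with the symptom, how many have the disease?
via_counts = Fraction(disease_symptom, disease_symptom + healthy_symptom)

# Route 2: conditional probabilities plus Bayes's rule.
total = disease_symptom + disease_no_symptom + healthy_symptom + healthy_no_symptom
n_disease = disease_symptom + disease_no_symptom
n_healthy = healthy_symptom + healthy_no_symptom

base_rate = Fraction(n_disease, total)                          # P(disease) = 10/100
p_symptom_if_disease = Fraction(disease_symptom, n_disease)     # 8/10
p_symptom_if_healthy = Fraction(healthy_symptom, n_healthy)     # 4/90, about 4.4 percent

via_bayes = (p_symptom_if_disease * base_rate) / (
    p_symptom_if_disease * base_rate + p_symptom_if_healthy * (1 - base_rate)
)

print(f"Natural frequencies: {via_counts}")
print(f"Bayes's rule:        {via_bayes}")
```

Both routes give the same two-thirds, but the first needs one division while the second needs three intermediate probabilities and a formula, which is the glossary’s point.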

“Cancer screening poses a particularly hard challenge also because in order to evaluate the available figures one needs to apply counterfactual reasoning, and consider what would have turned out differently if at some point before that event – such as a cancer diagnosis – a different decision had been taken” says Roberto Satolli, a cardiologist turned journalist who for nine years headed the ethics committee of the National Cancer Institute in Milan, the first such committee in Italy, which was founded in 1973 by Umberto Veronesi and Giulio Maccacaro. Satolli, who currently heads the ethics committee of the health authority of Northern Emilia, is among the authors of an ongoing trial on informed consent led by Paola Mosconi, from Milan’s Mario Negri Institute for pharmacological research (the protocol was published in BMC Cancer. 2017 Jun 19;17(1):429). Mosconi and colleagues are trying to avoid being paternalistic towards the women invited by health authorities to enroll in the screening programmes, also in light of disagreements and misunderstandings on statistics. “Although the debate on mammography screening is lively, most stakeholders – physicians, policy-makers, lay people or patients’ associations – agree on the need to inform women properly, and consider this an ethical obligation. The current debate on mammography screening adds a new challenge: how disagreement among scientists, such as uncertainties about the estimates especially of potential harms from the screening, should be managed, and how public health institutions and scientists should cope with communication when there are data interpretation divergences and conflicts” Mosconi explains.

A strong prejudice in favor of screening

“We have a strong prejudice in favor of earlier diagnosis, and it took us many years to realise that earlier diagnosis also inevitably leads to overdiagnosis, as we call the diagnosis of a disease that would never cause harm or symptoms during a patient’s ordinarily expected lifetime” Satolli continues. “Many clinicians wrongly assume that overdiagnosis corresponds to false positives, but these are different concepts. We can calculate the rate of false positives (healthy patients who are wrongly diagnosed with the disease), but we cannot measure overdiagnosis exactly, because the very act of diagnosing alters the picture, introducing a pressure to start some treatment”.

The multi-centre trial uses an online interface to evaluate the amount of information that women enrolled in three different local screening programmes in Turin, Florence and Palermo are willing to receive before taking a decision. The first screen offers basic data, with hyperlinks leading to in-depth explanations in progressively more technical language. The behaviour of women interacting with the online platform will then be compared with that of women receiving a more traditional paper leaflet.

“When the woman clicks on the ‘accept’ button, the system asks one more time: ‘are you sure?’ before completing the procedure. The challenge consists in letting each individual participant make up her mind, without assuming that there is a fixed threshold for the amount of numerical data that everyone should compute: in fact in real life no decision is taken simply based on figures, not even in the clinical setting” Satolli argues. “Most numbers are important for public health, but they are not always the most relevant factor in individual decisions, which ideally should be reached with the physician”.

Provided, according to Paolo Bruzzi – clinical epidemiologist at the Italian National Cancer Institute in Genoa – that the physician knows how to deal with uncertainty: “Part of the problem lies in the mindset of most medical students which is still very mechanistic and deterministic, and struggles with uncertainty. We always look for certainties, but good statistics increases uncertainties. The task of the physician consists in assimilating and conveying the principle that every statement in medicine is based on uncertainty”.

The importance of statistics in medicine has been recognised only in recent decades, and according to many experts even basic concepts are still widely misunderstood and misused by researchers. Take the so-called p-value: an apparently simple measure of the reliability of a result that is in fact not at all simple to use, and frequently lends itself to inappropriate interpretations (see also “Navigating uncertainty in the era of MMM”). Another element of concern is the tendency to focus on arbitrary cut-off levels that are supposed to distinguish between what is effective and what is useless, ending up with a black and white description of a scenario that instead needs colors and shades of grey.

According to Ingrid Mühlhauser, epidemiologist at the University of Hamburg and a specialist in evidence-based patient information, there is no need for more statistics, but rather for better statistics: “For instance, in Germany many women undergo mammography before age 50, with a lot of overdiagnosis. The important message in this case is that overdiagnosis exists, even though we cannot quantify it precisely”.

No math beyond percentages is really needed

Statistician David Spiegelhalter, who teaches public understanding of risk at the University of Cambridge and currently heads the UK Royal Statistical Society, also agrees that the lack of statistical literacy is widespread, “but there is no need for any mathematics beyond percentages, best expressed as ‘what would we expect to happen to 100 people?’”.

On the other hand, he says, “statistics can be tricky things: not because of the mathematics, but because of the difficulty of interpreting them appropriately, and the ease with which they can be used to advance a particular argument. It is crucial to understand how even simple percentages can be manipulated to tell the desired story, for example using relative rather than absolute risks, mortality rates rather than survival rates, or quoting increased 5-year survival rates when all that has happened is that the age at diagnosis has been reduced” he adds.
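Spiegelhalter’s last example, a rise in 5-year survival that reflects nothing more than earlier diagnosis, is easy to reproduce with a toy cohort. In the Python sketch below every number is hypothetical and deliberately extreme: all patients die at age 70 whatever happens, and the only thing screening changes is the age at diagnosis. The function name `five_year_survival` is ours.

```python
def five_year_survival(ages_at_diagnosis, ages_at_death):
    """Share of patients still alive five years after their diagnosis."""
    survivors = sum(
        1
        for diagnosed, died in zip(ages_at_diagnosis, ages_at_death)
        if died - diagnosed >= 5
    )
    return survivors / len(ages_at_diagnosis)


# Hypothetical cohort of 100 patients: everyone dies at 70, with or without screening.
ages_at_death = [70] * 100
diagnosed_by_symptoms = [67] * 100    # diagnosed late, when symptoms appear
diagnosed_by_screening = [60] * 100   # same disease, found seven years earlier

survival_without = five_year_survival(diagnosed_by_symptoms, ages_at_death)
survival_with = five_year_survival(diagnosed_by_screening, ages_at_death)

print(f"5-year survival without screening: {survival_without:.0%}")
print(f"5-year survival with screening:    {survival_with:.0%}")
print(f"Average age at death in both groups: {sum(ages_at_death) / len(ages_at_death):.0f}")
```

In this artificial cohort 5-year survival jumps from 0% to 100% although nobody lives a day longer. Real data are never this clean, but the mechanism is the same.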

“One should always keep in mind that clinical trials answer a very raw question, and are based on the assumption that the results on the ‘average patient’ can be generalized” argues oncologist Paolo Casali, who works on sarcomas and rare cancers at the Italian National Cancer Institute in Milan. “There never was a methodology for clinical decision-making: it has been the object of studies since the ’50s, but it never reached the patient’s bedside. We are now at a time when methodology is going to change. Today we need a multidisciplinary approach that calls for a greater involvement of clinicians and information technology experts. The use of artificial intelligence and machine learning will introduce new methodological challenges” Casali predicts.

This will likely require updating the curricula of current and future researchers, and also giving physicians, medical students and all health professionals opportunities to look at the results of clinical trials through better lenses.

Despite the possible future adoption of new statistical methods by researchers, though, Spiegelhalter is convinced that the basic concepts will stay the same: “There will always be some disagreement about how to interpret the available evidence, and uncertainty about the precise balance of potential harms and benefits of an intervention. While it is good to try and resolve disputes, given the wide variation in patient preferences, rough magnitudes should generally be good enough”.

Same mistakes after 40 years?

“As both the number and cost of clinical laboratory tests continue to increase at an accelerating rate, physicians are faced with the task of comprehending and acting on a rising flood tide of information. We conducted a small survey to obtain some idea of how physicians do, in fact, interpret a laboratory result”. It was 1978 when Ward Casscells and colleagues from the Harvard School of Public Health published their small survey (N Engl J Med 1978; 299:999-1001), based on a pretty simple question: If a disease has a prevalence of 1 in 1000, and a particular test for it has a false positive rate of 5%, and one random person tests positive for the disease, what is the likelihood that they have the disease? “Eleven of 60 participants, or 18 per cent, gave the correct answer” they wrote. “The most common answer, given by 27, was 95 per cent, with a range of 0.095 to 99 per cent. The average of all answers was 55.9 per cent, a 30-fold overestimation of disease likelihood”.
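For readers who want to check the survey question themselves, the Python sketch below works through it. It rests on one assumption that the question as quoted does not state: that the test picks up every true case (a sensitivity of 100%). The function name and parameters are ours.

```python
def positive_predictive_value(prevalence, false_positive_rate, sensitivity=1.0):
    """Probability that a person who tests positive actually has the disease.

    sensitivity=1.0 is an assumption: the survey question as quoted gives
    no figure for how many true cases the test detects.
    """
    true_positives = prevalence * sensitivity
    false_positives = (1 - prevalence) * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Figures from the 1978 survey question: prevalence 1 in 1000, false positive rate 5%.
ppv = positive_predictive_value(prevalence=1 / 1000, false_positive_rate=0.05)
print(f"Chance that a positive result means disease: {ppv:.1%}")

# The same reasoning in natural frequencies: out of 1000 people tested,
# 1 is ill and tests positive, and about 50 of the 999 healthy people
# (999 * 0.05 = 49.95) test positive as well.
ill_and_positive = 1
healthy_and_positive = 999 * 0.05
print(f"Positive tests per 1000 people: about {ill_and_positive + healthy_and_positive:.0f}")
```

Under that assumption the answer is roughly 2%: about one true case among some 51 positive results, far from the 95% that most participants chose.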

I agree with the author and colleagues. Biostatistics is a growing topic with continuous development of new techniques. With a computer and the aid of many websites, even the most sophisticated statistical analyses can be done. These technical revolutions mean that the boundary between the essential statistics and the more advanced statistical methods has been blurred.

I am a thoracic surgeon. An understanding of biostatistics is essential to all surgeons, and most of them received some statistics lessons during their training. Nevertheless, I think that few surgeons sit down to read statistics books. What surgeons need is to take tiny doses of biostatistics, absorbed rapidly.

“Inappropriate or wrong statistical analysis”: these are words of great concern when we read them in reviewers’ comments.

Hence, many journals have launched series of invited reviews about statistics in surgical research. These articles will only scratch the surface of medical statistics, but they will provide a stimulus to improve the skills needed to interpret statistical analyses.

Translating data into statistics is a beautiful, necessary and reductionist task. Percentages are words. The “public” needs to be fed words, not numbers. Selecting the correct percentages is a highly ethical task.